Logic Apps Standard : error loading designer

This morning I ran into an issue loading the workflow designer, with the following errors:

System.Private.CoreLib: Could not load type ‘Microsoft.Azure.WebJobs.Host.Scale.ConcurrencyManager’ from assembly ‘Microsoft.Azure.WebJobs.Host, Version=3.0.22.0, Culture=neutral, PublicKeyToken=31bf3856ad364e35’.

Value cannot be null. (Parameter ‘provider’)

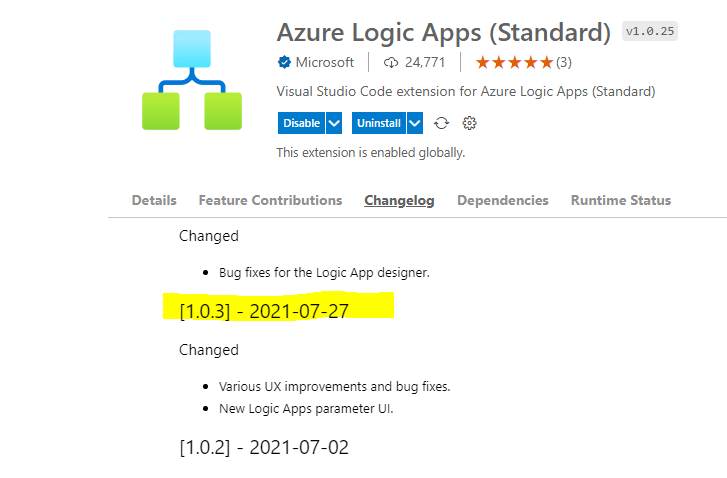

There was no recent change to the Logic Apps extension:

Installed Azure Functions Core Tools version: 3.0.22.0

=> So I didn't really understand what was causing this behaviour.

When I checked the contents of the Microsoft.Azure.WebJobs.Host, Version=3.0.22.0 DLL, the ConcurrencyManager class was missing:

Solution:

I installed the latest 3.x version of Azure Functions Core Tools: func-cli-3.0.4585-x64.msi

Checking the WebJobs assembly again, the missing class is now present:

Hope this post helps save time, but this versioning dependency between Logic Apps and Azure Functions is becoming a nightmare!

Logic Apps Standard Managed Identity for API Connection using Access Policy With Azure Bicep

Managed identities provide an identity for applications to use when connecting to resources that support Azure Active Directory (Azure AD) authentication. There are two types of managed identities: system-assigned and user-assigned. This article focuses only on the system-assigned one.

System-Assigned Managed Identity

- Created as part of an Azure resource (for example, Azure Virtual Machines or Azure App Service).

- Shared life cycle with the Azure resource that the managed identity is created with. When the parent resource is deleted, the managed identity is deleted as well.

- Can’t be shared. It can only be associated with a single Azure resource.

Steps

- Enable System Identity when creating Logic Apps

- Give identity access to API connection using an Access Policy

Enable System Identity when creating Logic Apps

This article does not show how to create a Logic Apps Standard app using Bicep; you can check this great demo from Samuel Kastberg.

When creating your Logic App, add the identity object to your Bicep code:

```bicep
identity: {
  type: 'SystemAssigned'
}
```

If the deployment succeeds, the system-assigned identity should show as enabled in the Azure portal:

Give identity access to API connection using an Access Policy

```bicep
resource MyLAAccessPolicy 'Microsoft.Web/connections/accessPolicies@2016-06-01' = {
  name: '${MyConnection.name}/${MyLA.name}'
  location: resourceGroup().location
  properties: {
    principal: {
      type: 'ActiveDirectory'
      identity: {
        tenantId: subscription().tenantId
        objectId: MyLA.identity.principalId
      }
    }
  }
}
```

Important:

The access policy name must have the format: connection name + / + Logic App name or ID.
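To make the naming rule concrete, here is a minimal Python sketch that assembles the same access policy as a plain ARM resource dictionary, roughly the JSON the Bicep resource above describes. The helper name and the sample values are hypothetical; the resource type, API version and property layout are copied from the Bicep snippet.

```python
import json


def access_policy_resource(connection_name: str, logic_app_name: str,
                           tenant_id: str, principal_id: str,
                           location: str) -> dict:
    """Hypothetical helper mirroring the Bicep accessPolicies resource."""
    return {
        "type": "Microsoft.Web/connections/accessPolicies",
        "apiVersion": "2016-06-01",
        # Child resource name: <connection name>/<Logic App name or ID>
        "name": f"{connection_name}/{logic_app_name}",
        "location": location,
        "properties": {
            "principal": {
                "type": "ActiveDirectory",
                "identity": {
                    "tenantId": tenant_id,
                    # principalId of the Logic App's system-assigned identity
                    "objectId": principal_id,
                },
            }
        },
    }


if __name__ == "__main__":
    policy = access_policy_resource("azureblob", "my-logicapp",
                                    "<tenant-id>", "<principal-id>",
                                    "westeurope")
    print(policy["name"])  # azureblob/my-logicapp
    print(json.dumps(policy, indent=2))
```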

Recommended reading

Authenticate access to Azure resources with managed identities in Azure Logic Apps

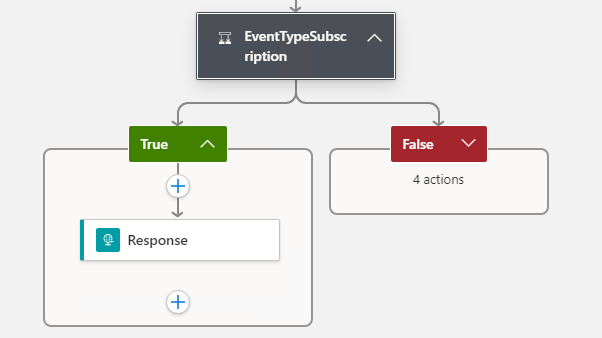

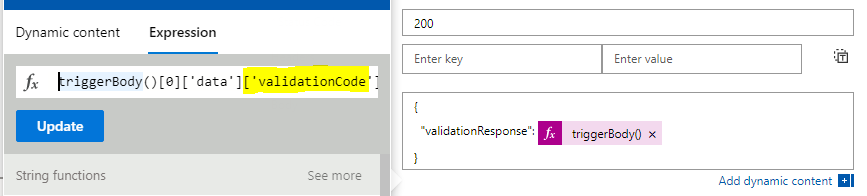

Endpoint validation with Event Grid events subscription & Logic Apps Standard

When subscribing a Logic Apps Standard workflow as a webhook that receives pushed Event Grid events, the workflow must take part in a validation handshake to prove that you own the endpoint. The workflow therefore needs a branch dedicated to the handshake validation.

The validation can be:

- Synchronous handshake: At the time of event subscription creation, Event Grid sends a subscription validation event to your endpoint. The schema of this event is similar to any other Event Grid event. The data portion of this event includes a validationCode property. Your application verifies that the validation request is for an expected event subscription, and returns the validation code in the response synchronously. This handshake mechanism is supported in all Event Grid versions.

- Asynchronous handshake: In certain cases, you can’t return the validationCode in the response synchronously. Starting with version 2018-05-01-preview, Event Grid supports a manual validation handshake. If you’re creating an event subscription with an SDK or tool that uses API version 2018-05-01-preview or later, Event Grid sends a validationUrl property in the data portion of the subscription validation event. To complete the handshake, find that URL in the event data and do a GET request to it. You can use either a REST client or your web browser.

This article focuses on the synchronous handshake.

When adding an endpoint subscription whose workflow does not handle the handshake, the following error is raised:

Webhook validation handshake failed for https://xyzxyz.azurewebsites.net/runtime/webhooks/workflow/scaleUnits/prod-00/workflows/xyzxyz/triggers/When_a_resource_event_occurs/paths/invoke. Http POST request retuned 2XX response with response body . When a validation request is accepted without validation code in the response body, Http GET is expected on the validation url included in the validation event(within 10 minutes)

The following workflow shows how to handle the validation handshake:

The condition checks the type of the event:

When validation is requested, the following event payload is sent to the webhook endpoint:

In response, the validationCode must be returned:

This way, the handshake is validated synchronously when the event subscription is created.
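The workflow itself is built in the designer, but its branching logic fits in a few lines. Below is a minimal Python sketch of the same handshake, not the Logic Apps implementation: it assumes the Event Grid event schema (a JSON array of events with eventType and data fields) and answers the validation event with a validationResponse property, as described in the Event Grid endpoint validation documentation. The function name is hypothetical.

```python
import json
from typing import Optional, Tuple

VALIDATION_EVENT = "Microsoft.EventGrid.SubscriptionValidationEvent"


def handle_event_grid_request(body: str) -> Tuple[int, Optional[dict]]:
    """Return (HTTP status, JSON response body) for an Event Grid POST."""
    events = json.loads(body)
    # The Event Grid schema delivers a JSON array; tolerate a single object too
    if isinstance(events, dict):
        events = [events]
    for event in events:
        # Same check as the workflow's condition on the event type
        if event.get("eventType") == VALIDATION_EVENT:
            code = event["data"]["validationCode"]
            # Echoing the code back completes the handshake synchronously
            return 200, {"validationResponse": code}
    # Regular events (e.g. BlobCreated): process them, then acknowledge
    return 200, None
```

The design mirrors the workflow: one branch returns the validation code, the other handles business events, and both answer with a 2xx status.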

Feel free to comment, make suggestions, or ask if you have any issues!

Azure DevOps : Automate Event Grid Subscription for Logic Apps Standard Workflow

Azure Event Grid is a great candidate for event-based exchanges. It can push notifications to many types of endpoints:

This article shows how to automate an Event Grid subscription for a Logic Apps Standard workflow when a file is dropped into a storage account container.

First of all, an Event Grid system topic must be created. In my case, I used Bicep for my IaC:

```bicep
resource SASysTopic 'Microsoft.EventGrid/systemTopics@2021-12-01' = {
  name: '${MySA.name}-systopic'
  location: resourceGroup().location
  properties: {
    source: MySA.id
    topicType: 'Microsoft.Storage.StorageAccounts'
  }
  identity: {
    type: 'SystemAssigned'
  }
  dependsOn: [
    MySA
  ]
}
```

This must be executed only once, within the infrastructure provisioning pipeline:

To register a subscription to this system topic that fires when a file is created in a specific container, the following PowerShell can be embedded in an « Azure CLI » task in a release pipeline. Why can't this subscription be created as part of the IaC? Because a Logic Apps Standard app can contain more than one workflow, so even once the service is created, no workflow URL can be computed until the workflows are deployed. With the Consumption SKU, one Logic Apps resource = exactly one workflow.

```powershell
# Pipeline variables provided by the release definition
$subscription = "$(SubID)"
$ResourceGroupName = "$(ResourceGroupName)"
$sysTopicName = "$(SysTopicName)"
$eventSubName = "$(EventGridSubName)"
$LogicAppName = "$(LogicAppsName)"
$MyWorkflow = "$(WorkflowName)"

# 1. Get the callback URL of the workflow's Event Grid trigger
$workflowDetails = az rest --method post --uri "https://management.azure.com/subscriptions/$subscription/resourceGroups/$ResourceGroupName/providers/Microsoft.Web/sites/$LogicAppName/hostruntime/runtime/webhooks/workflow/api/management/workflows/$MyWorkflow/triggers/When_a_resource_event_occurs/listCallbackUrl?api-version=2018-11-01"
$endPointURL = ($workflowDetails | ConvertFrom-Json).value
Write-Host "Logic App url : $endPointURL"

# 2. Create (or update) the event subscription on the system topic
$restEventSubURL = "https://management.azure.com/subscriptions/$subscription/resourceGroups/$ResourceGroupName/providers/Microsoft.EventGrid/systemTopics/$sysTopicName/eventSubscriptions/$eventSubName"
$restEventSubURL = $restEventSubURL + '?api-version=2021-06-01-preview'
Write-Host "Event Sub url : $restEventSubURL"

# Only BlobCreated events under the given container are sent to the webhook
$body="{'properties': {'destination': {'endpointType':'WebHook','properties': {'endpointUrl':'$endPointURL'}},'filter': {'isSubjectCaseSensitive': false,'subjectBeginsWith': '/blobServices/default/containers/containerName/blobs','includedEventTypes': ['Microsoft.Storage.BlobCreated']}}}"

az rest --method put --uri $restEventSubURL --body $body --headers Content-Type='application/json'
```

This script first gets the Logic Apps workflow URL (more details here), then creates a subscription for blob creation events that notifies a webhook: the endpoint URL of the Logic Apps workflow that uses the Event Grid connector as its trigger.

The REST API is the only approach that works at the moment: the AZ CLI has an issue that truncates the endpoint URL at the « & » character, as confirmed in this thread. An issue has also been opened for the AZ CLI team on GitHub.
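If you prefer to build the request outside PowerShell, the minimal Python sketch below produces the same URL and body as the script above. It is an illustration under assumptions: the function name and sample values are hypothetical, and the body is serialized with json.dumps, so it is strict double-quoted JSON and the & separators of the callback URL travel untouched inside the endpointUrl string.

```python
import json
from typing import Tuple


def event_subscription_request(subscription_id: str, resource_group: str,
                               system_topic: str, subscription_name: str,
                               endpoint_url: str, container: str) -> Tuple[str, str]:
    """Return (url, body) for the PUT call made by the release pipeline script."""
    url = (
        f"https://management.azure.com/subscriptions/{subscription_id}"
        f"/resourceGroups/{resource_group}"
        f"/providers/Microsoft.EventGrid/systemTopics/{system_topic}"
        f"/eventSubscriptions/{subscription_name}"
        "?api-version=2021-06-01-preview"
    )
    body = {
        "properties": {
            "destination": {
                "endpointType": "WebHook",
                # The callback URL keeps its full query string; json.dumps
                # leaves '&' as-is, so nothing gets truncated.
                "properties": {"endpointUrl": endpoint_url},
            },
            "filter": {
                "isSubjectCaseSensitive": False,
                "subjectBeginsWith": f"/blobServices/default/containers/{container}/blobs",
                "includedEventTypes": ["Microsoft.Storage.BlobCreated"],
            },
        }
    }
    return url, json.dumps(body)
```

The resulting pair can then be sent with any authenticated client, for example az rest --method put.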

Finally, this subscription must be validated to confirm that you own the endpoint. You can do that by calling the validation URL sent to your endpoint when the registration script runs:

Just copy/paste this URL into a browser, but be quick, before it expires 🙂:
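Since the validation URL expires quickly, you may prefer to follow it from code. This minimal Python sketch, assuming the Event Grid event schema, extracts the validationUrl from a subscription validation event and sends the GET request that completes the manual handshake. The helper names are hypothetical, and only the standard library is used.

```python
import json
import urllib.request
from typing import Optional

VALIDATION_EVENT = "Microsoft.EventGrid.SubscriptionValidationEvent"


def find_validation_url(body: str) -> Optional[str]:
    """Return the validationUrl of a subscription validation event, if any."""
    events = json.loads(body)
    if isinstance(events, dict):
        events = [events]
    for event in events:
        if event.get("eventType") == VALIDATION_EVENT:
            return event.get("data", {}).get("validationUrl")
    return None


def complete_manual_handshake(validation_url: str, timeout: float = 30.0) -> int:
    """Send the GET request that completes the asynchronous handshake."""
    with urllib.request.urlopen(validation_url, timeout=timeout) as response:
        return response.status
```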

I’ll try to show how to handle this validation handshake synchronously in a future article.

Retrieve Logic Apps (standard) Workflow URL with Powershell

I was looking for a way to get a Logic Apps workflow URL to automate an Event Grid subscription with a webhook, and I came across this great article from Mike Stephenson.

Unfortunately, it only works for Logic Apps Consumption. The aim of this article is to share how to get the workflow URL for Logic Apps Standard. To learn more about Logic Apps Standard: Link1

The following PowerShell uses the management API to return the workflow URL:

```powershell
$subscription = 'your subscription ID'
$ResourceGroupName = 'your RG name'
$LogicAppName = 'your Logic Apps name'
$MyWorkflow = 'your workflow name'

# POST to listCallbackUrl on the workflow's trigger (here the Event Grid trigger)
$workflowDetails = az rest --method post --uri "https://management.azure.com/subscriptions/$subscription/resourceGroups/$ResourceGroupName/providers/Microsoft.Web/sites/$LogicAppName/hostruntime/runtime/webhooks/workflow/api/management/workflows/$MyWorkflow/triggers/When_a_resource_event_occurs/listCallbackUrl?api-version=2018-11-01"
Write-Host $workflowDetails
```

NB: in my case I’m using the Event Grid trigger « When_a_resource_event_occurs »; change this name for other triggers.

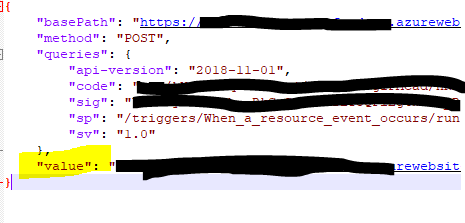

This call returns the following JSON result:

To parse the JSON result and return only the workflow URL:

```powershell
# Keep only the "value" property, which holds the callback URL
$url = ($workflowDetails | ConvertFrom-Json).value
Write-Host $url
```

Hope this helps!
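If you need the same call outside PowerShell, the following minimal Python sketch rebuilds the management URI used by the script and extracts the value property, the equivalent of ($workflowDetails | ConvertFrom-Json).value. The helper names are hypothetical, and the trigger name defaults to the Event Grid trigger used in this article, so change it for other triggers. The POST itself still needs an authenticated client, such as az rest.

```python
import json


def list_callback_url_uri(subscription_id: str, resource_group: str,
                          logic_app: str, workflow: str,
                          trigger: str = "When_a_resource_event_occurs") -> str:
    """Build the listCallbackUrl management URI for a Logic Apps Standard trigger."""
    return (
        f"https://management.azure.com/subscriptions/{subscription_id}"
        f"/resourceGroups/{resource_group}"
        f"/providers/Microsoft.Web/sites/{logic_app}"
        "/hostruntime/runtime/webhooks/workflow/api/management"
        f"/workflows/{workflow}/triggers/{trigger}"
        "/listCallbackUrl?api-version=2018-11-01"
    )


def extract_workflow_url(response_text: str) -> str:
    """Python equivalent of ($workflowDetails | ConvertFrom-Json).value."""
    return json.loads(response_text)["value"]
```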

Logic Apps PG 1:1 meeting

I had the pleasure of a 30-minute 1:1 meeting with the Azure Logic Apps product group (PG), and especially with Divya Swarnkar.

Main points of this exchange:

-Some of my financial clients, whose data must reside only on their own sites, are discouraged from migrating their Biztalk flows to Azure. With the new Logic Apps runtime, built on the Azure Functions runtime, a workflow can be hosted in a Docker container, so these clients can use their own infrastructure. But what about enterprise connectors?

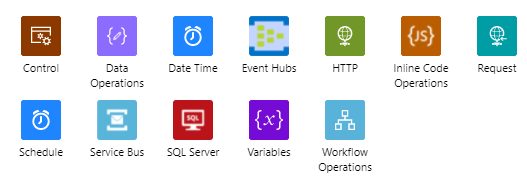

=> To run only on the local runtime, only « Built-in » connectors must be used: Azure connectors run only on Azure, so connections and data cross the Azure network to reach partners. The good news is that the list of built-in connectors can grow depending on usage needs. Divya asked which connectors are used most, and my answer was: at least the connectors provided by Biztalk: SAP, File, FTP/SFTP, MQSeries.

So far, the available « Built-in » connectors are:

-Coming from a Biztalk background, I’m comparing what Biztalk offered to plan how existing solutions can be upgraded to Logic Apps. What could replace BAM for business monitoring?

=> For business monitoring, Azure Log Analytics data can be used. Within the workflow, we can define tracked properties on actions (the equivalent of Biztalk promoted properties); they are sent to Log Analytics and can be retrieved by queries on the Log Analytics data.

Finally, I want to thank the LA PG team for this great initiative and encourage them to keep up these short 1:1 exchanges.

Self provision a Biztalk developer machine Part II : Azure DevOps

In this second part (see Part I), we will see how to create an Azure DevOps pipeline that creates a new VM from an ARM template.

1-Create ARM template

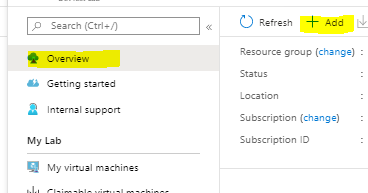

To start, let’s create a template of a Biztalk 2016 VM. From the lab previously created, click « Add » on the « Overview » blade:

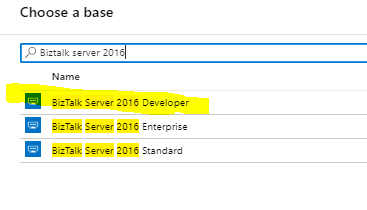

Then search for the Biztalk Server 2016 Developer image (SQL Server + Visual Studio pre-installed):

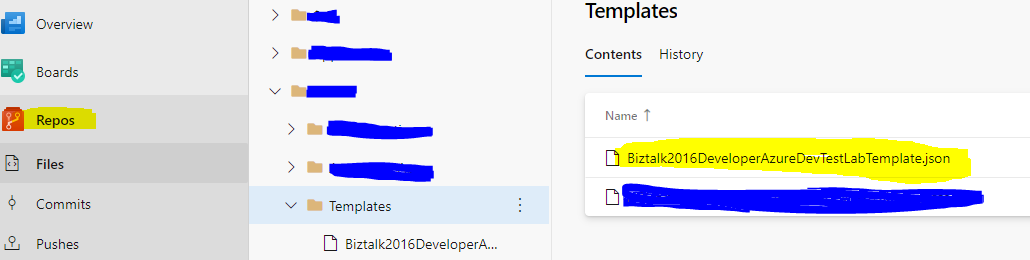

Select the template content and save it locally as a JSON file: Biztalk2016DeveloperAzureDevTestLabTemplate.json

2-Add template to git

This template must be added to a Git repository so that the Azure DevOps pipeline can reference it:

3-DevOps pipeline

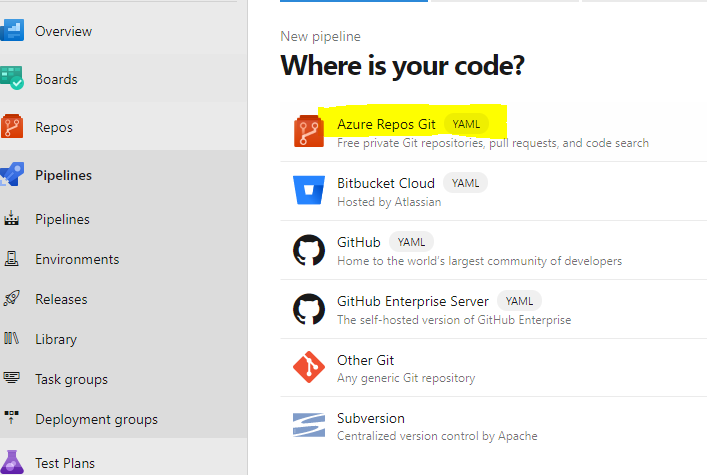

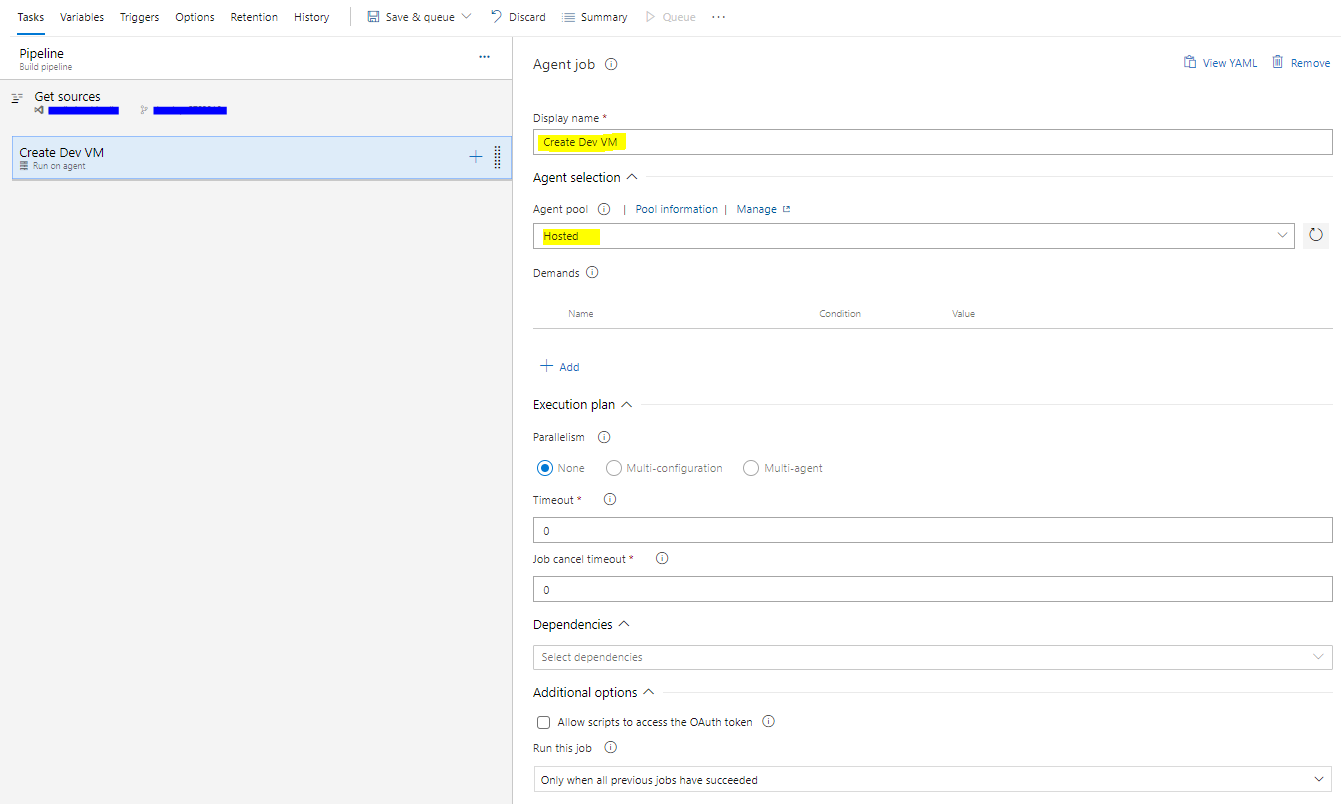

Create a new pipeline:

Select the Git repository where the JSON template was added:

The pipeline will run on a hosted agent:

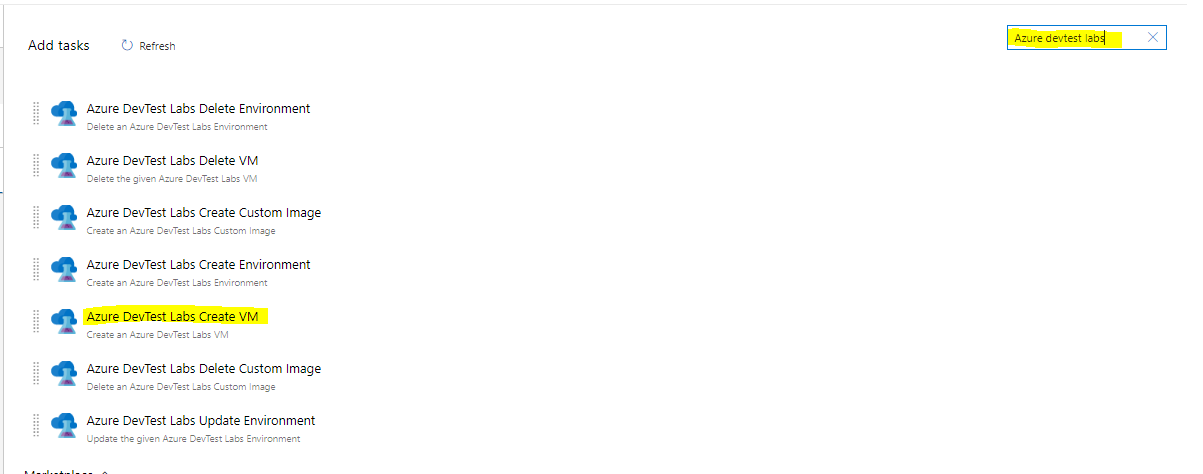

Add the « Create Azure DevTest Labs VM » task:

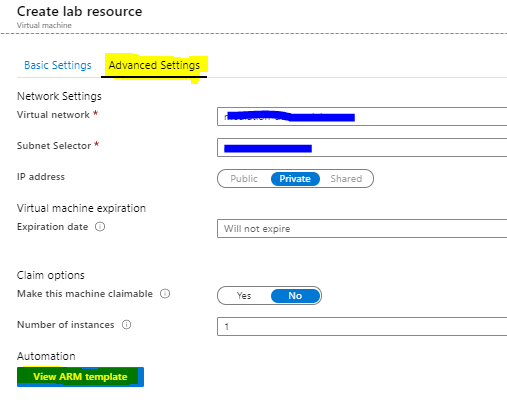

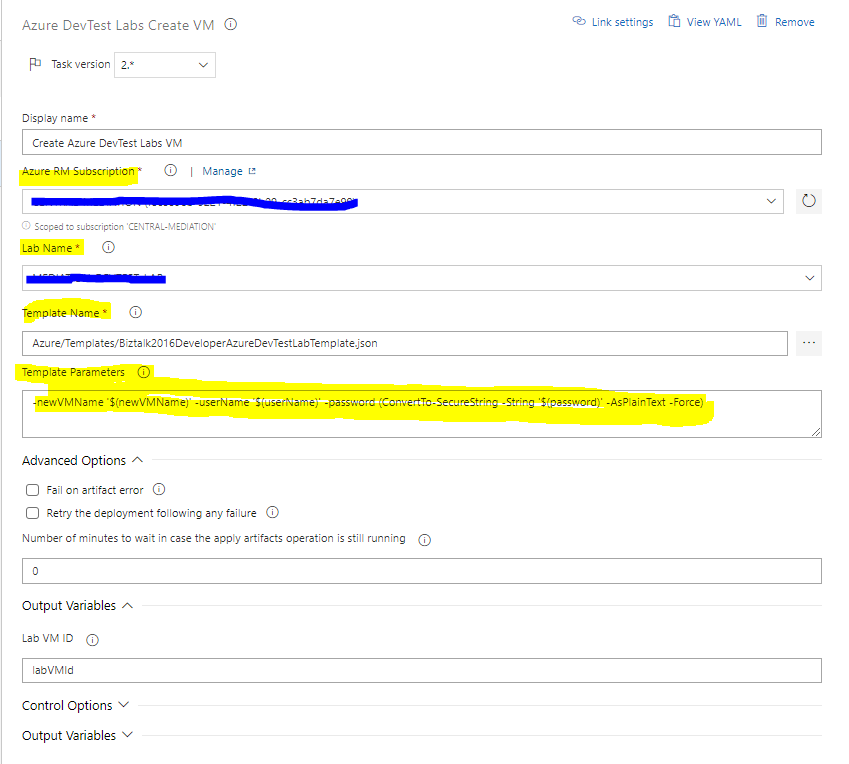

Configure the task:

-Select your Azure subscription

-The Lab created in part I

-ARM template from Git

-Template parameters

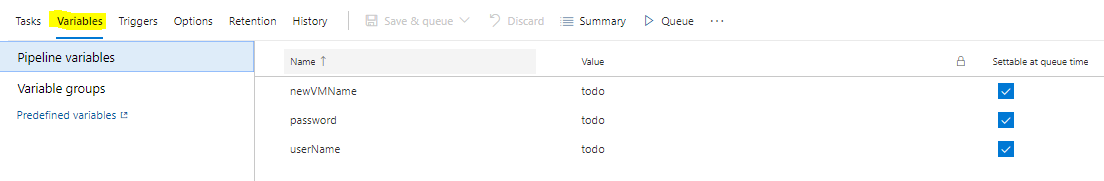

For template parameters, I created three pipeline parameters that prompt the user for the VM name, user name, and password:

The values of these parameters replace the template’s parameters.
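To find out which template parameters can be mapped to pipeline parameters, you can list them from the saved template. The minimal Python sketch below does that and renders override pairs in the -name 'value' form; it assumes the task's template parameters field accepts that form, and the parameter names in the usage example (newVMName, userName) are hypothetical, so use the names declared in your own template. Keep the password in a secret pipeline variable rather than in plain text.

```python
import json
from pathlib import Path


def load_template(path: str) -> dict:
    """Read an ARM template saved from the portal (tolerates a UTF-8 BOM)."""
    return json.loads(Path(path).read_text(encoding="utf-8-sig"))


def template_parameters(template: dict) -> dict:
    """Return {parameter name: declared type} for an ARM template."""
    return {name: spec.get("type", "")
            for name, spec in template.get("parameters", {}).items()}


def override_string(values: dict) -> str:
    """Render "-name 'value'" pairs for the pipeline task's parameter field."""
    return " ".join(f"-{name} '{value}'" for name, value in values.items())
```

For example, override_string({"newVMName": "$(vmName)", "userName": "$(userName)"}) returns -newVMName '$(vmName)' -userName '$(userName)'.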

Now all you need to do is share the pipeline link with each new joiner so they can create their own developer environment:

Hope this blog post helps you automate dev VM creation.

Self provision a Biztalk developer machine Part I : Azure DevTest Labs

The aim of this post is to share a quick solution for creating a developer machine for a new joiner in a team. Previously, we had to share a VHD image from one of the team’s developers, so the new joiner spent time cleaning up their VM, and sometimes we forgot some settings, such as the source control credentials. With Azure DevTest Labs and an Azure DevOps pipeline, a new joiner can create their own VM with all the artifacts needed to start developing, and unused VMs can be deleted when a developer leaves the team.

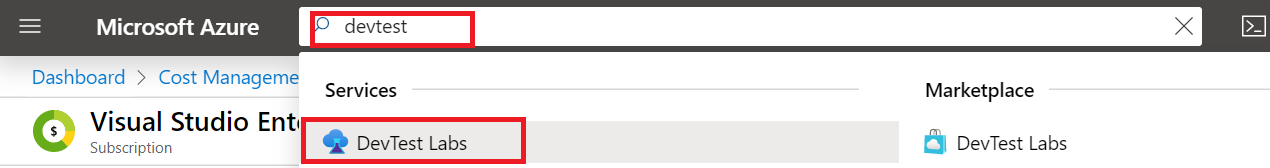

First of all, we need to create an Azure DevTest labs resource:

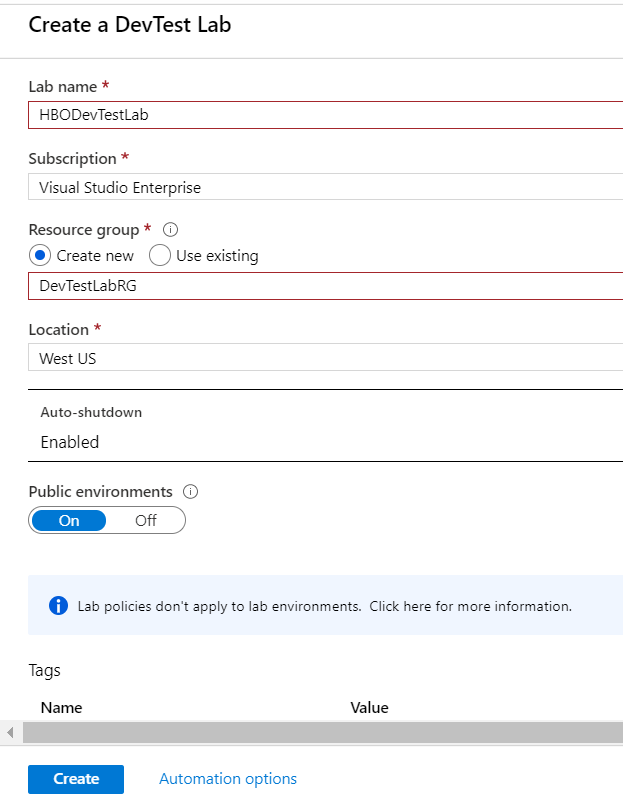

Click the Create button and fill in the lab’s information:

NB: keep auto-shutdown enabled for cost optimisation. This option can be changed later.

That’s all for this first part. In Part II, we will see how to use DevOps pipelines to create a new VM in the lab.

Why you have to think 10 times before using Logic Apps Liquid Transform connector?

For one of my recent projects, I needed to implement advanced message transformations in a Logic Apps workflow. The natural choice was the Liquid transform connector to map/transform JSON messages.

The headaches started with some really basic filters: Minus, Plus, Split… Another interesting feature that is not supported: nested templates.

Doing some research, I found other customers suffering from the same problems:

Many Shopify Liquid filters are buggy or not yet supported by the DotLiquid version used by Logic Apps. To find out which DotLiquid version Logic Apps uses, I contacted the Microsoft product group (thanks to Derek Li & Rama krishna for their responsiveness). Logic Apps actually uses a specific version, 2.0.361, built internally and not published on the DotLiquid GitHub repo, as mentioned in the following thread. The good news is that the PG is planning to upgrade this version as soon as possible:

=>The current ETA for this change is March 2022 but could be changed due to unforeseen circumstances.

As a Biztalk specialist, I usually use XSLT for really complex transformation scenarios, so this can be a real blocker when implementing workflows with complex transformations in Logic Apps.

Considerations when planning to use Liquid within Logic Apps:

-For math operations: ensure that your JSON fields are numbers, not strings surrounded by quotes.

-To guess which filters will be supported, I assumed (maybe I’m wrong, but it was helpful 🙂) that a version older than 2.0.361 from the public repo would behave similarly. I used version 1.6.1.

-If you want to use the latest DotLiquid version, you can run the transformation in an Azure Function and return the result as JSON or a string.

-For XML messages, I advise using XSLT instead of Liquid.

Hope this blog post helps others save time when implementing complex transformations.

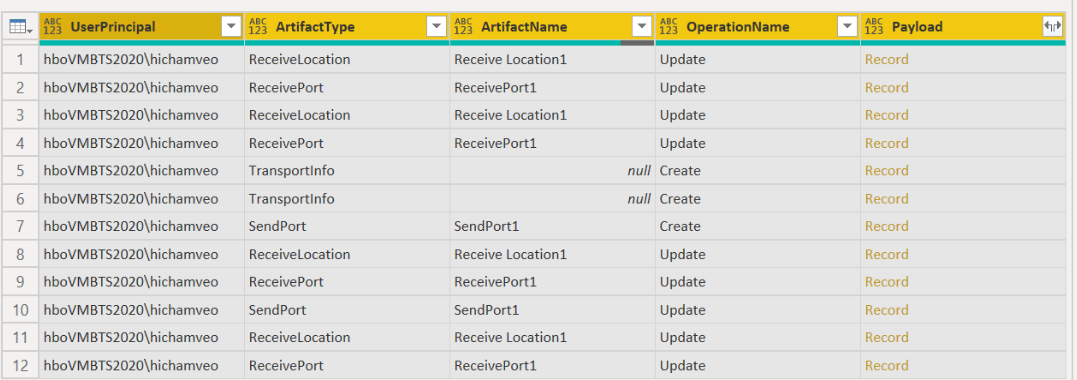

Biztalk server 2020 : View Audit log of common management operations with Power BI

Biztalk Server 2020 has just been released with some useful features. Among them is an audit log that tracks changes to Biztalk artifacts. In this blog post, I’ll try to explain how to ingest the API data and display it in a human-readable way with Microsoft Power BI.

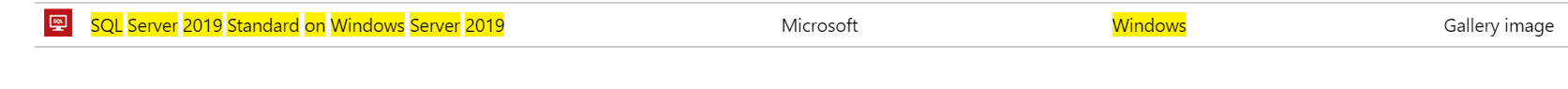

The first step is to install Biztalk 2020. In my case, I chose to create a VM on Azure from a built-in image on the Azure Marketplace:

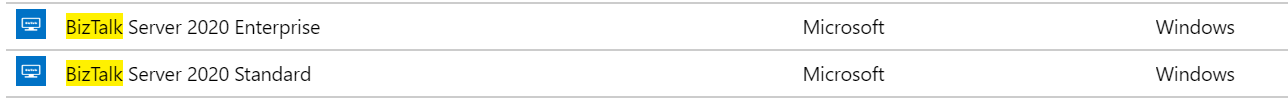

A few days after I created my VM, Microsoft published an image for BTS 2020:

The installation is the same as for the previous version of Biztalk. You can refer to the installation procedure in this link.

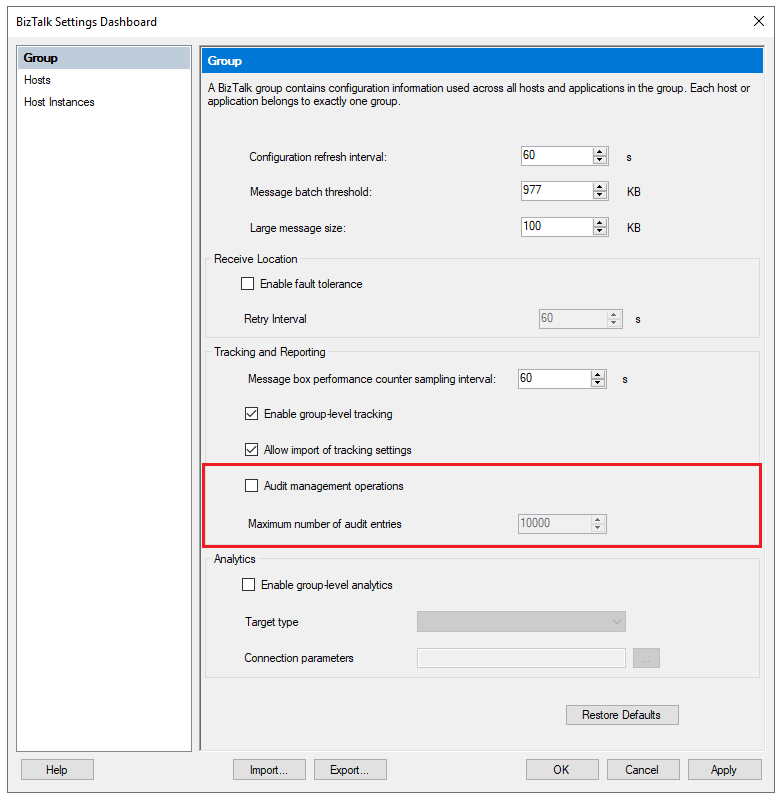

After installing and configuring your Biztalk environment, we need to enable auditing of management operations in the Biztalk console:

The new API operation that lists audited operations is available at the following URL:

http://localhost/BizTalkOperationalDataService/AuditLogs

On first access I got an access denied error, so I enabled anonymous authentication and gave my user privileges on the IIS site folder.

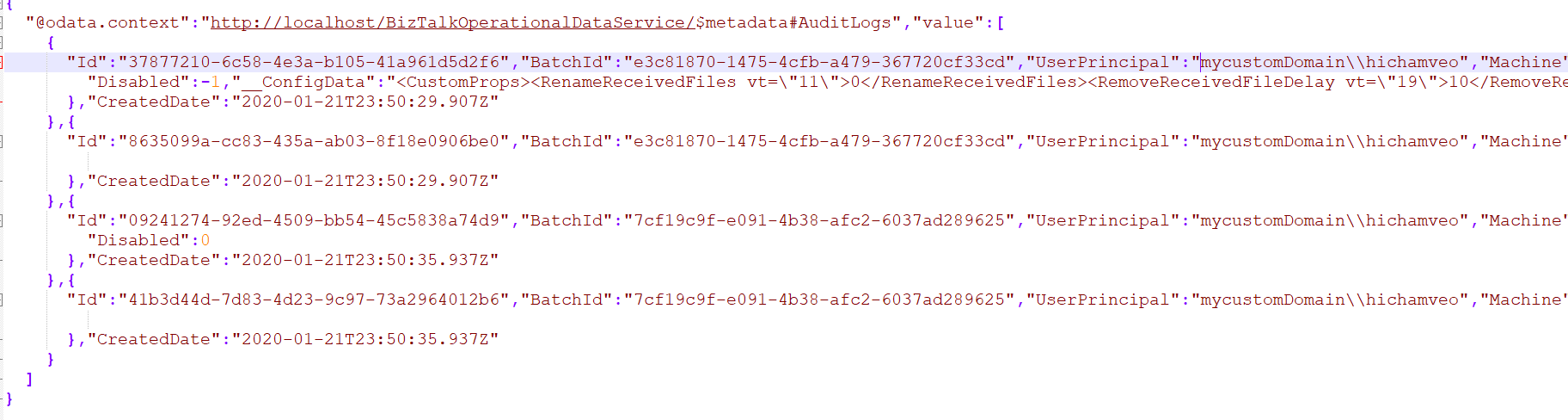

The API returns its result as JSON:

To display the API result in a human-readable format, I installed Power BI Desktop.

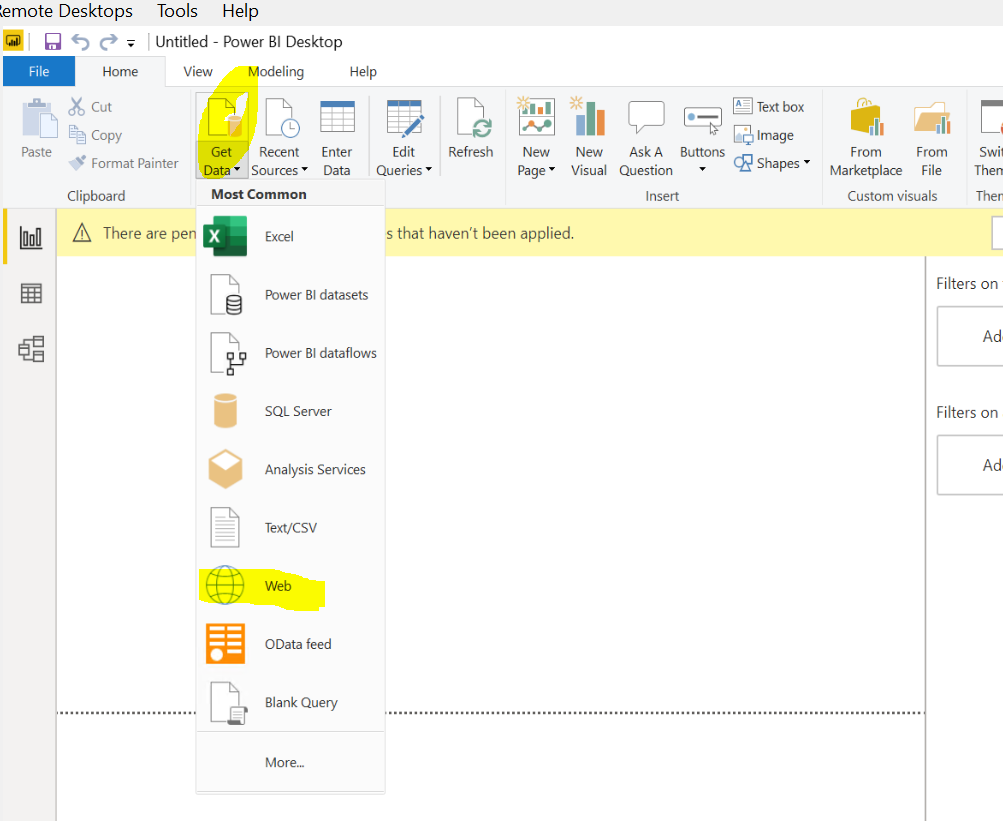

In Power BI Desktop (no need to create an account), click:

1-Get Data

2-Web

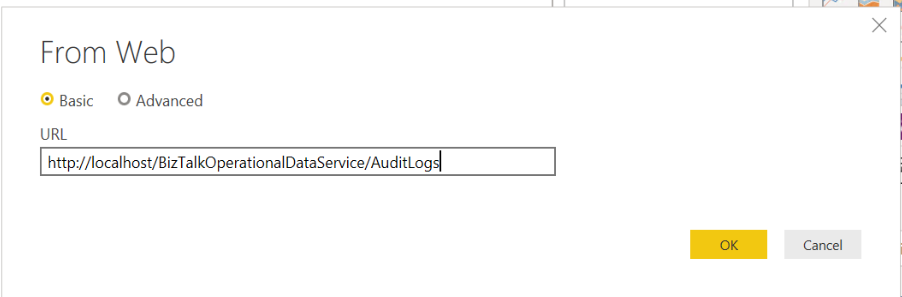

3-Copy/paste the audit log API URL and click OK:

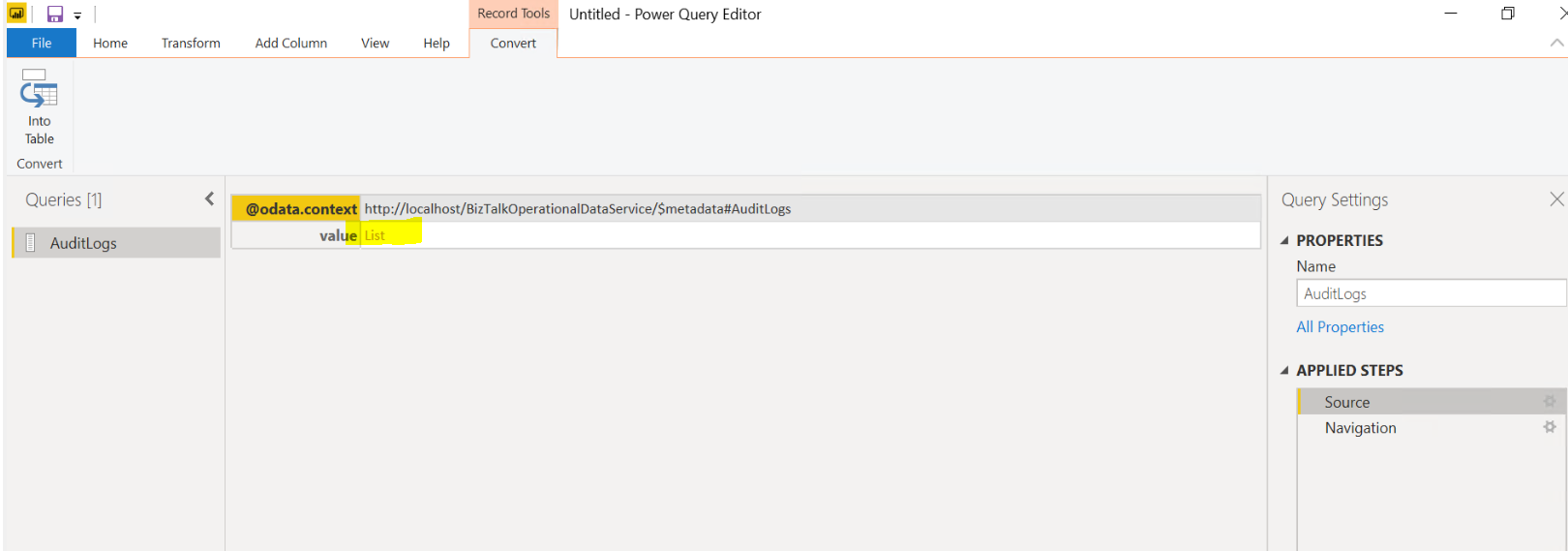

4-A new window opens; click the List value:

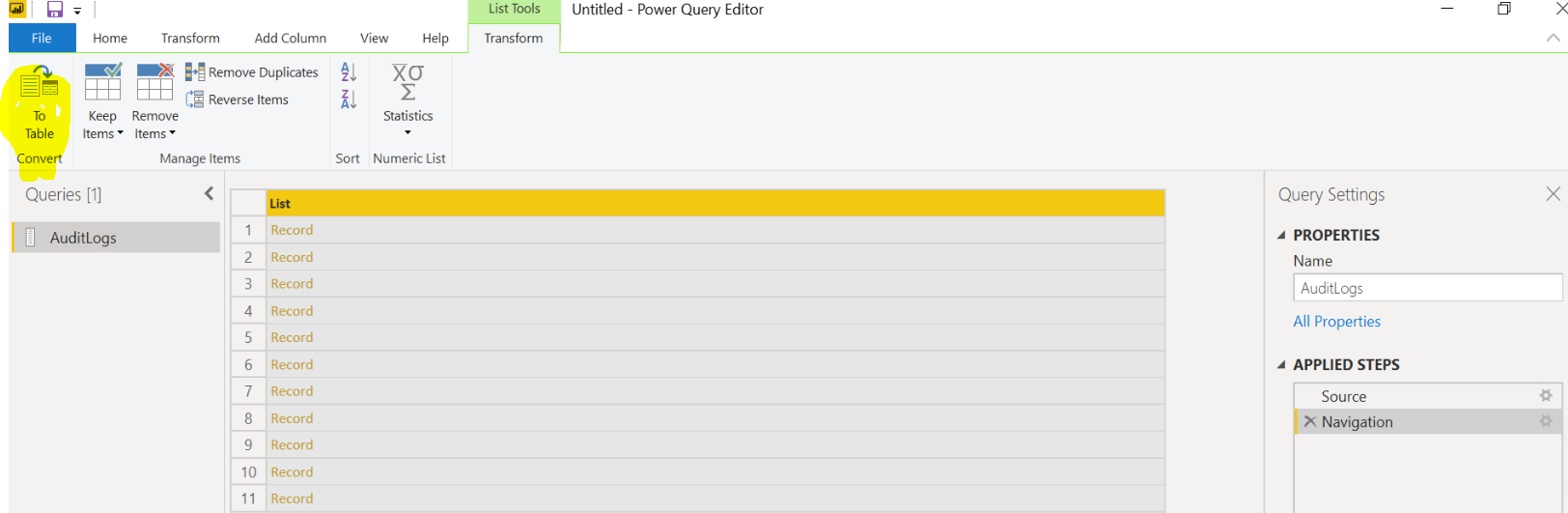

5-Convert this list into a table:

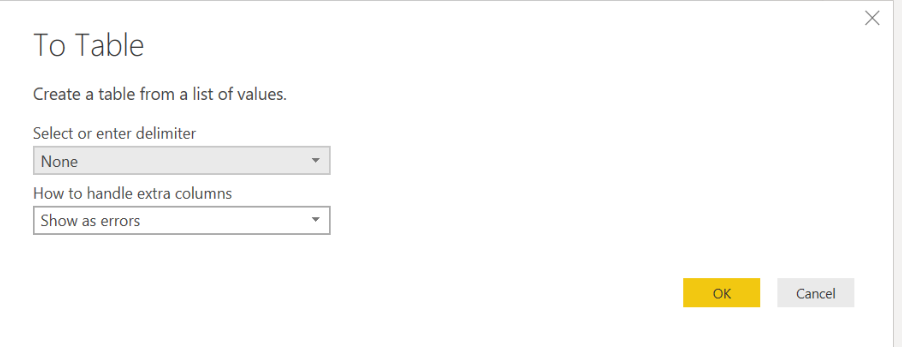

In the Create table pop-up, leave the default values:

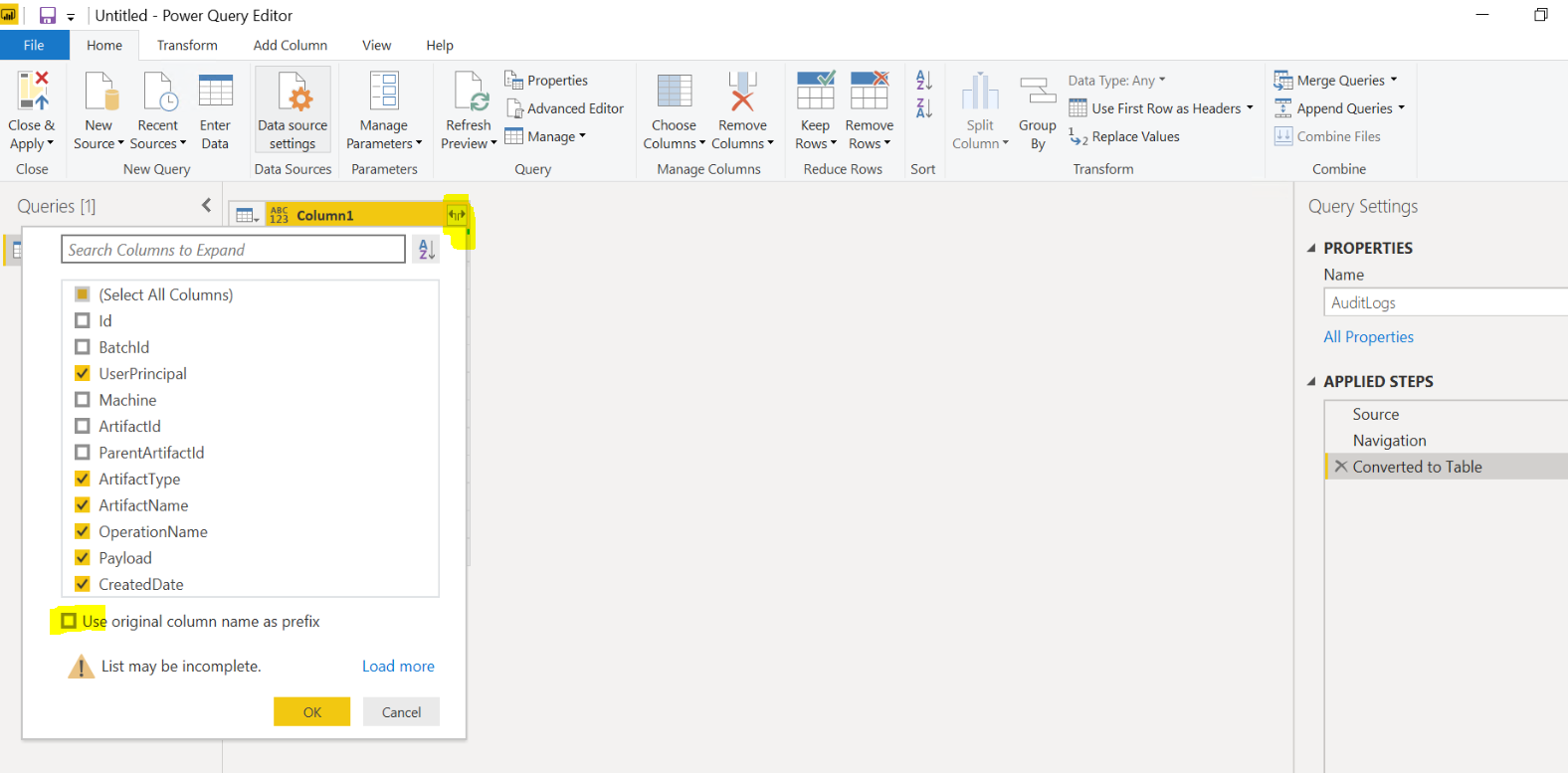

6-Custom columns: click the button at the top right of Column1 and choose the following columns:

-UserPrincipal: User who performed the operation.

-ArtifactType: Type of artifact on which operation was performed, for example SendPort, ReceivePort, Application etc.

-ArtifactName: User configured name of the artifact, for example, FTP send port.

-OperationName: Action performed on the artifact, for example Create.

The following table summarizes the possible operation names for the different artifact types:

| Artifact type | Operation name |
|---|---|
| Ports | Create/Update/Delete |
| Service Instances | Suspend/Resume/Terminate |
| Application resources | Add/Update/Remove |
| Binding file | Import |

-Payload: Contains information about what is changed in JSON structure, for example, {"Description":"New description"}.

-CreatedDate: Timestamp when the operation was performed.

As a result, we get the list of changes by artifact type, along with the user who made each change:

For more details on which properties changed, click the Record link in the Payload column.
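For readers who would rather script the extraction than click through Power BI, here is a minimal Python sketch that reads the same AuditLogs endpoint and keeps the six columns listed above. It assumes anonymous access is enabled (as described earlier) and that the response is either a bare JSON array or an object wrapping the records in a value property; check your own response before relying on either. The helper names are hypothetical.

```python
import json
import urllib.request

AUDIT_URL = "http://localhost/BizTalkOperationalDataService/AuditLogs"
COLUMNS = ["UserPrincipal", "ArtifactType", "ArtifactName",
           "OperationName", "Payload", "CreatedDate"]


def fetch_audit_logs(url: str = AUDIT_URL):
    """GET the audit log API; assumes anonymous access is enabled on IIS."""
    with urllib.request.urlopen(url, timeout=30) as response:
        return json.loads(response.read().decode("utf-8-sig"))


def to_rows(data) -> list:
    """Keep only the columns selected in Power BI, one dict per operation."""
    # Assumption: a bare JSON array, or an object wrapping it in "value"
    records = data.get("value", []) if isinstance(data, dict) else data
    return [{column: record.get(column) for column in COLUMNS}
            for record in records]
```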

This is very useful information for a large Biztalk group with a big team of administrators.

That’s all for this lightweight version. For a V2, we could parse the Payload column based on the artifact type, because its content differs from one type to another.
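As a first step toward that V2, the Python sketch below groups the changed property names by artifact type. It assumes each Payload is a JSON object keyed by property name, like the {"Description":"New description"} example above, delivered either as a JSON string or as an already-parsed object; the function name is hypothetical.

```python
import json
from collections import defaultdict


def changed_properties_by_type(rows: list) -> dict:
    """Map ArtifactType -> sorted names of properties seen in Payload."""
    result = defaultdict(set)
    for row in rows:
        payload = row.get("Payload")
        if isinstance(payload, str):
            try:
                payload = json.loads(payload)
            except json.JSONDecodeError:
                continue  # Not JSON: skip it rather than guess its format
        if isinstance(payload, dict):
            result[row.get("ArtifactType")].update(payload.keys())
    return {artifact: sorted(names) for artifact, names in result.items()}
```

Rows without a usable Payload are simply ignored, so each artifact type only lists properties that actually appeared in its changes.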
